In this series we will tackle a common theme of networked applications: optimizing how they fetch data from the network. Fetching data from a server is trivial in any modern framework or OS, but optimizing how often an app accesses the network, in order to save bandwidth, battery, and user frustration, among other things, is complex. It is more so if you also want to reduce code duplication, ensure testability, and leave something useful (and comprehensible) for the next engineer.

Every year, new technological trends emerge, along with endless lists of areas, tools, and systems that are expected to drive progress, innovation, and evolution in technology and related fields over the coming year. Last year I talked about six technological trends, but this year I intend to focus on only three, to keep things more focused.

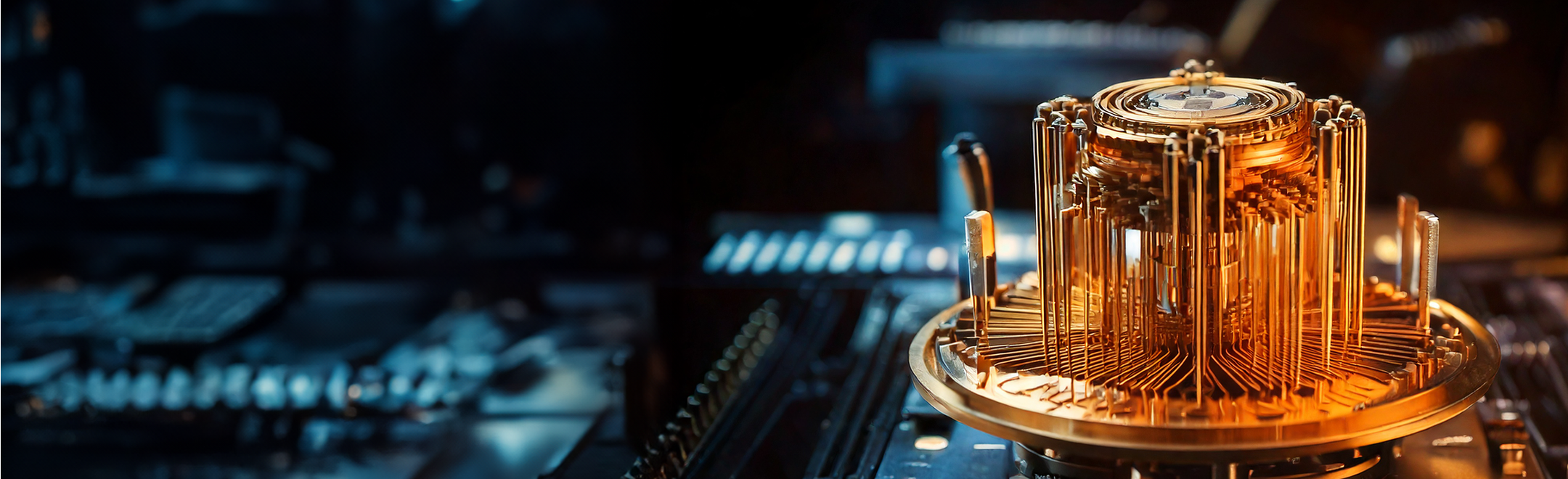

I’ll be talking about generative artificial intelligence, quantum computing, and sustainable technology.